We are ready to assist you

Hardw/AI: Why 192 GB of VRAM Is the New “Sanitary Minimum” for Inference

By the end of 2025, the industry hit a breaking point: CAPEX for model operation (Inference) surpassed training costs for the first time. However, deploying Dense 400B+ models (e.g., Llama 3.1 405B) in commercial environments met harsh physical limits: the era-standard 80 GB of memory on H100 became a bottleneck killing project economics.

Below is an analysis of why memory has become more critical than FLOPS, and an honest look at B200 and MI325X specs without marketing embellishments.

Anatomy of the “Memory Wall”

In LLM inference we fight for two metrics: Time To First Token (TTFT) and Inter-Token Latency (ITL).

Golden rule: hot data (Weights + KV Cache) must reside in HBM.

If the model does not fit into VRAM and CPU Offloading begins, catastrophe follows:

- Bandwidth abyss: You drop from HBM3e (~8 TB/s) to DDR5 (~400–800 GB/s per socket). A 10–20× difference.

- Generation collapse: Speed plummets from 100+ tokens/sec to 2–3 tokens/sec — unacceptable for chatbots, copilots or real-time RAG pipelines.

KV Cache: The hidden memory devourer

Many forget that model weight size (e.g., ~810 GB for 405B in FP16) is only half the issue. The second half is the Context Window.

At 128k tokens of context and a Batch Size of 64, the KV cache may consume hundreds of gigabytes.

- Reality: Even with aggressive FP8 quantization, long contexts push KV cache to evict model weights.

- Implication: You don't just need to “fit the model”—you need 30–40% memory headroom for dynamic per-session caches.

Specification Battle: B200 vs MI325X (Fact-check)

The market responded with chips featuring increased memory density. Yet it is crucial to separate real datasheets from marketing slides.

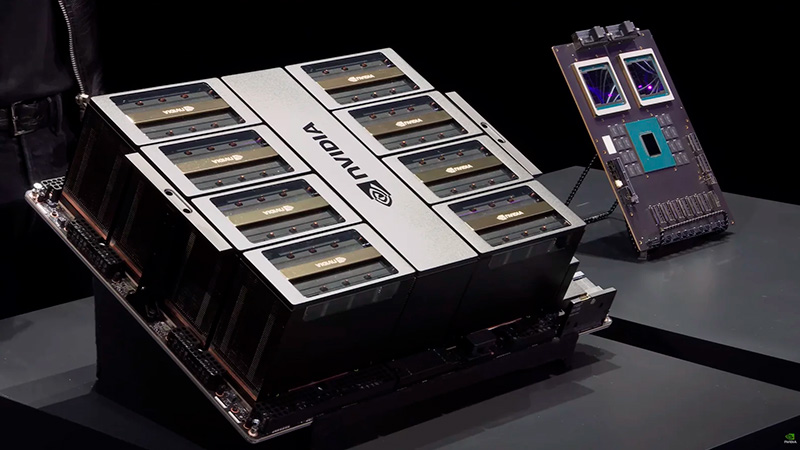

1. Nvidia B200 (Blackwell)

Marketing claims 192 GB of HBM3e.

Engineering reality: Physically the chip does contain 192 GB (8 stacks × 24 GB). However, in production systems (HGX/DGX) the user typically gets 180 GB — the remaining 12 GB are reserved for ECC and yield enhancement.

- Verdict: A major upgrade over the H100’s 80 GB, but for 400B+ models on a single node (8× GPU), sharding strategy remains crucial.

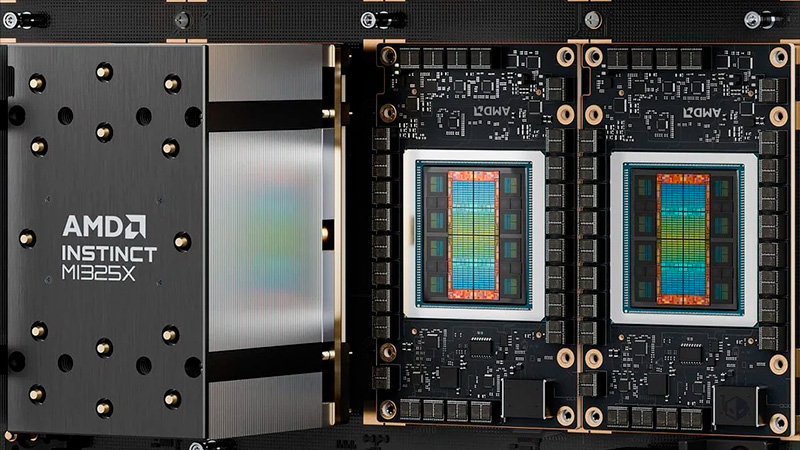

2. AMD Instinct MI325X

An incorrect number — 288 GB — has been circulating online.

Engineering reality: MI325X (late-2025 flagship) has 256 GB of HBM3e. The 288 GB figure belongs to the upcoming generation (CDNA 4), which is not yet commercially available.

- Verdict: Even at 256 GB, AMD leads in Memory per GPU. This allows: — hosting Llama 3.1 70B on a single GPU with huge context headroom — hosting 405B on 4 GPUs instead of 8.

| Characteristic | Nvidia B200 | AMD Instinct MI325X |

|---|---|---|

| Memory (Usable) | ~180 GB HBM3e | 256 GB HBM3e |

| Bandwidth | ~8 TB/s | ~6 TB/s |

| Typical Usage | Maximum performance (FP4/FP8), proprietary CUDA stack. | Maximum capacity (RAG, Long Context), open ROCm stack. |

2026 Trends: The Battle with Inter-GPU Link

Why are we fighting so hard for memory per chip?

To run a GPT-4/5-class model, we use Tensor Parallelism, slicing model layers across 8 or 16 GPUs.

The issue: even NVLink Gen-5 adds latency. Fewer GPUs required → faster inference → lower TCO. In 2026, the winner won’t be who has more FLOPS, but who can run a “fat” model on the fewest chips.

HYPERPC Conclusion

At HYPERPC, we see businesses shifting from “training at any cost” to efficient inference. The race for model parameters has become a race for VRAM.

For our enterprise clients deploying on-prem LLMs, we recommend the following strategy:

- Do not save on VRAM: If your task involves large-document RAG, choose GPUs with maximum memory. Memory shortage cannot be compensated by a CPU.

- Balance the ecosystem: Nvidia B200 remains the standard for complex CUDA-based pipelines, but AMD’s 256 GB GPUs are extremely compelling for long-context workloads.

- Use ready-made platforms: Building such clusters manually risks cooling and power issues. Our HYPERPC AI & HPC servers are engineered for 700W+ thermal envelopes, ensuring stable 24/7 operation without throttling.

Invest in memory today to avoid paying for idle servers tomorrow.

Sources and Technical Documentation

This article is based on data from official specs and engineering blogs:

- Nvidia Blackwell Architecture Technical Brief Nvidia Technical Blog / Datasheets — Details on B200 architecture, memory specs, and NVLink.

- AMD Instinct MI325X Specifications AMD Official Website - Instinct Accelerators — Confirmation of 256 GB HBM3e.

- Meta Llama 3.1 Hardware Requirements Meta AI Engineering Blog — Memory requirements for 405B models (FP16/FP8).