- Introduction

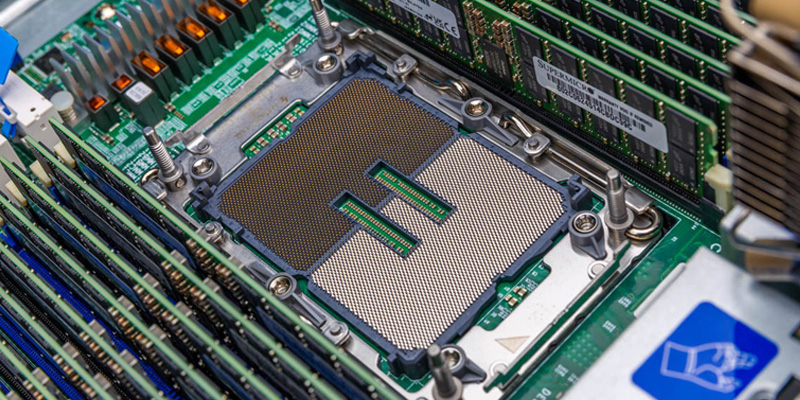

- Processors

- Graphics Cards

- CPU Comparison

- Server Platforms

- Memory

- Use Cases

- Conclusion

- Frequently Asked Questions

- Additional Resources

Server processors 2025: a comparison of AMD EPYC, Intel Xeon, and ARM solutions for data centers and pro workstations.

Server processors: overview and outlook through 2025

In an industry where performance and energy efficiency directly determine competitive advantage and operating costs, selecting a server CPU is a strategic decision. In 2025, the server processor market is defined not just by technical rivalry but by fundamentally different architectural philosophies among the key players. While classic x86 solutions from AMD and Intel continue to evolve, delivering record multithreaded performance and specialized acceleration, ARM-based alternatives are steadily taking root in specific niches thanks to their strong performance per watt.

This article provides a technical analysis of modern server CPUs, their platforms, and optimal usage scenarios: the information needed to build a sound investment and operations strategy for data centers and professional workstations.

Introduction

The modern server landscape has transformed radically. Intel Xeon was once the almost uncontested standard for data centers, but AMD’s return to the high-performance server segment with EPYC in 2017 ushered in a new era of competition. Today, AMD EPYC is not merely catching up: in many areas it sets the benchmark, especially in multithreaded workloads and compute density.

In parallel, a third force is gaining momentum: ARM-based processors that have moved from mobile devices into server racks, promising a revolution in performance per watt. This overview aims to provide a clear, marketing-free comparison of the key 2025 platforms—AMD EPYC, Intel Xeon, and promising ARM solutions—focusing on practical value for specialists making procurement and infrastructure deployment decisions.

Where the data comes from

The analysis is based on aggregated data from authoritative industry sources, including technical reviews, official vendor specifications, and independent benchmark results. Key sources include publications from expert IT media and hosting providers (such as King Servers and Itelon) that provide practical deployment case studies.

Data from resources specializing in comparative hardware testing (for example, CPUBenchmark.net) and official press releases from manufacturers were also considered. All conclusions and recommendations focus on the market state in 2024–2025 and aim to maximize end-user value when planning infrastructure projects.

Processors

Intel

Intel Corporation, long synonymous with the term “server CPU,” continues to develop its flagship Xeon lineup, betting on a mature ecosystem, strong single-thread performance, and built-in hardware accelerators for specific workloads.

Scalable Intel Xeon for servers

The current Intel Xeon Scalable generations, represented by the Sapphire Rapids and Granite Rapids architectures, remain a cornerstone for many enterprise environments. Sapphire Rapids is manufactured on Intel 7 (a 10 nm-class process) and offers up to 60 performance cores (P-cores) and 120 threads per socket; Granite Rapids (Xeon 6) moves to the Intel 3 process and raises the ceiling to 128 P-cores per socket. Their key strength is not only raw compute but also a rich set of integrated engines: Intel Advanced Matrix Extensions (AMX) for AI inference and machine learning, Intel QuickAssist Technology (QAT) for accelerating encryption and data compression, and Intel Data Streaming Accelerator (DSA) for offloading data copying and movement.
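Before sizing an inference workload around AMX, it is worth confirming that the host actually exposes it. A minimal sketch, assuming a Linux x86 host: the kernel lists CPU features in the `flags` line of `/proc/cpuinfo`, where AMX appears as `amx_tile`, `amx_bf16`, and `amx_int8`. The helper names are hypothetical, and QAT and DSA are not covered because they enumerate as PCIe devices rather than CPU flags.

```python
from pathlib import Path

# Linux /proc/cpuinfo flag names for Intel AMX
AMX_FLAGS = {"amx_tile", "amx_bf16", "amx_int8"}


def parse_cpu_flags(cpuinfo_text: str) -> set[str]:
    """Return the feature flags from the first 'flags' line of /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            # Line format: "flags\t\t: fpu vme de ..."
            _, _, values = line.partition(":")
            return set(values.split())
    return set()


def missing_amx_flags(cpuinfo_text: str) -> set[str]:
    """Return the AMX flags that are NOT reported (empty set: AMX is exposed)."""
    return AMX_FLAGS - parse_cpu_flags(cpuinfo_text)


if __name__ == "__main__":
    path = Path("/proc/cpuinfo")
    if path.exists():
        missing = missing_amx_flags(path.read_text())
        print("AMX available" if not missing else f"missing: {sorted(missing)}")
```

Inside a VM, the hypervisor must also pass these features through, so the check is best run in the environment where the workload will actually execute.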

This makes Intel Xeon a preferred choice for workloads where optimization for specific, often legacy, enterprise applications such as SAP HANA or complex databases matters as much as multithreading. In multi-socket configurations (up to 8 CPUs), the Xeon platform offers proven reliability and mature inter-CPU communication mechanisms, which is critical for vertically scaling large monolithic systems.

Intel Xeon W

The Intel Xeon W lineup targets the high-end professional workstation market, where maximum compute resources are required within a single-socket platform. Models such as the Xeon w9-3595X combine Xeon’s architectural advantages (ECC memory support, enterprise management features) with high clock speeds.

They are built for rendering, CAD design, scientific simulation, and data analysis: workloads where both parallel processing and single-thread responsiveness matter. However, according to AMD’s own benchmarks, its flagship Threadripper PRO processors can outperform Xeon W by more than 100% in pure compute tasks such as V-Ray or KeyShot rendering.

Intel Xeon E

The budget Intel Xeon E lineup (formerly Xeon E3) occupies the entry-level server niche and small-business starter systems. These processors, often sharing a platform with desktop Core CPUs, provide a basic set of server features—ECC memory support and increased reliability—at a significantly lower total cost of ownership.

They are optimal for file servers, backup systems, hosts with low virtualization density, or office infrastructure where extreme multithreading or advanced scalability is not required.

Intel Core

Using Intel Core processors (especially the flagship i9 series) in server solutions is a niche but real practice, mostly for specialized tasks or very tight budgets. On consumer chipsets, such systems lack key enterprise features, primarily ECC memory support, which raises concerns about data integrity under prolonged high load.

Their use can be justified in test environments, for individual container deployments, or for workloads that are highly sensitive to single-core frequency. But for production data-center workloads, choosing Xeon is effectively non-negotiable from a reliability and fault-tolerance standpoint.

AMD

AMD made an impressive leap into the server market by delivering an architecture focused on maximum core counts, high subsystem bandwidth, and energy efficiency. A chiplet-based strategy allowed AMD to rapidly increase compute density.

Scalable AMD EPYC for servers

AMD’s flagship server platform, represented in 2025 by the 9004 (Genoa, Bergamo) and 9005 (Turin) series, is Intel Xeon’s main competitor. Its defining trait is record core and thread counts within a single socket. For example, the EPYC 9754 (Zen 4c) offers 128 cores and 256 threads, while top Turin models reach up to 192 cores.

In multithreaded benchmarks, a dual-socket AMD EPYC configuration can deliver roughly 30–40% more total throughput than a comparable dual-socket Intel Xeon build, although the gap varies with the specific models and workload. Beyond cores, the platform’s advantages include 12 channels of DDR5 memory per socket (vs 8 on 4th- and 5th-generation Xeon Scalable) and 128 lanes of PCIe 5.0. This makes EPYC a strong fit for highly parallel workloads: virtualization, cloud environments, in-memory databases, high-performance computing (HPC), and big data processing.

AMD Ryzen Threadripper

For professional workstations, AMD offers Ryzen Threadripper and its pro variant, Threadripper PRO. These processors, such as the flagship Threadripper PRO 9995WX, effectively bring the EPYC philosophy (huge core counts, multi-channel memory, lots of PCIe lanes) into a workstation form factor. Top PRO models offer 64–96 cores, 8-channel DDR5, and up to 128 PCIe 5.0 lanes (non-PRO Threadripper models have fewer memory channels and lanes), which makes them exceptionally strong in rendering, 3D modeling, compositing, and simulation workloads.

As AMD notes, in rendering tests (Chaos V-Ray, Luxion KeyShot), Threadripper PRO demonstrates an advantage of more than 100% over competing Intel Xeon W solutions. This makes them a strong choice for creative studios, engineering firms, and research centers.

AMD EPYC 4004

The AMD EPYC 4004 series represents the company’s strategy to capture the entry- and mid-level single-socket server market. Built on the same Zen 4 architecture as its bigger siblings, but using the AM5 socket shared with desktop Ryzen processors, these CPUs deliver impressive multithreaded performance for their price class. They are ideal for SMBs, hosting providers, infrastructure services (file storage, domain controllers, web servers), and dense containerization, offering an excellent price-to-capability balance without the overhead of dual-socket platforms.

AMD Ryzen

Using desktop AMD Ryzen processors for server purposes, as with Intel Core, is a compromise. Some Ryzen 9 models with high core counts can look attractive for home labs, test benches, or low-power edge deployments. However, ECC support that depends on the specific model and motherboard, fewer reliability (RAS) features, and the lack of server-grade remote management make them unsuitable for any mission-critical workload in a commercial data center.

ARM vendors

The ARM architecture, long associated with mobile devices, has made a decisive breakthrough into the infrastructure processor market. Its philosophy, centered on energy efficiency and scalability, resonates with the largest cloud providers and data-center operators for whom electricity is a major component of operating expenses.

Ampere

Ampere Computing is one of the most active proponents of ARM architecture in the general-purpose server CPU segment. Its chips, Ampere Altra (up to 128 cores in the Altra Max) and AmpereOne (up to 192 cores), use homogeneous high-efficiency cores without simultaneous multithreading (SMT, marketed by Intel as Hyper-Threading).

Because each core runs a single thread, workloads do not compete for a shared core’s execution resources, which delivers predictable performance and low power consumption. Estimates suggest ARM solutions can provide up to 40% energy savings compared to traditional x86 CPUs in similar cloud and web-hosting workloads. The primary use cases are scalable web services, container platforms like Kubernetes, caching layers, and microservices architectures where density and efficiency matter.

NVIDIA Grace

NVIDIA, a leader in AI and HPC, introduced its ARM-based Grace processor, designed not as a general-purpose solution but as a highly optimized platform for AI and supercomputing. Grace features a unique architecture with ultra-fast NVLink-C2C connectivity between CPU and GPU, which is critical for exaflop-scale computing. It is intended to pair with NVIDIA Hopper and Blackwell accelerators, forming a unified, balanced compute platform for training and inference of the most complex neural network models.

Amazon Graviton

Amazon Web Services (AWS) demonstrates an ecosystem approach with its Graviton processor. Built specifically for the AWS cloud, Graviton (third and fourth generations) is optimized for price-performance across a wide range of AWS services such as EC2, RDS, ElastiCache, and others. For AWS customers, choosing Graviton instances often translates into direct savings of 20–40% at comparable or higher performance for cloud-native applications built for ARM.

RISC-V processors

The open and modular RISC-V architecture represents the most ambitious long-term challenge to both x86 and ARM. Its key advantage is the absence of licensing fees and the ability to deeply customize a chip for specific tasks. By 2025, RISC-V is taking its first confident steps into the server market. Companies such as Ventana Micro Systems have already announced 192-core processors intended for data centers.

For now, the main barrier is the maturity of the software ecosystem: operating systems, hypervisors, developer tooling, and business applications require porting and optimization. However, for specific highly specialized workloads (network devices, storage systems, accelerators), RISC-V can already offer high efficiency.

Graphics cards

In modern high-performance servers and workstations, the CPU is only part of the compute equation. Graphics accelerators (GPUs) and other specialized coprocessors handle tasks related to parallel data processing, artificial intelligence, scientific simulations, and professional visualization.

NVIDIA

Server GPUs

NVIDIA’s server GPU lineup, led in 2025 by Hopper (H100, H200) and Blackwell (B100, B200), is the de facto standard for AI and HPC. These accelerators combine Tensor Cores specialized for machine learning, high-bandwidth HBM3/HBM3e memory, and NVLink interconnects that join multiple cards into a single logical accelerator.

They are critical for training large language models (LLMs), recommender systems, quantum chemistry workloads, and climate modeling. Compatibility with CPU platforms from all major vendors (x86 and ARM) makes them a universal tool.

Desktop GPUs

The GeForce RTX lineup, including the RTX 50 series, dominates the workstation market for creative workloads. Technologies like CUDA, OptiX for ray tracing, and specialized AI cores (Tensor Cores) accelerate applications for rendering, 3D modeling, simulation, and video workflows. Paired with powerful multithreaded CPUs such as AMD Threadripper, they make for very high-performance workstations for content creators.

AMD

AMD Instinct

AMD’s Instinct accelerator series (MI300X, MI325X) is a direct alternative to NVIDIA solutions for data centers. Built on the CDNA 3 architecture with a chiplet design (the related MI300A additionally combines CPU and GPU chiplets in one package), they deliver strong performance in HPC and AI workloads. A key advantage is the open ROCm software ecosystem, providing developers more flexibility and control. These cards are used in supercomputer clusters and large cloud infrastructures seeking supplier diversification.

AMD Radeon

In professional workstations, AMD Radeon PRO GPUs (such as the W7000 series) deliver stable performance for CAD workloads, BIM modeling, media creation, and geospatial analysis. Their strengths include reliability, multi-monitor support, and optimization for specific professional applications.

Intel

After returning to the discrete graphics market, Intel offers server and workstation solutions in the form of the Intel Data Center GPU Flex and Max Series. Flex Series cards target cloud visualization (cloud gaming, VDI), media transcoding, and mid-tier inference workloads, while the Max Series targets HPC and AI. Integration with Intel Xeon through technologies like oneAPI provides a unified programming model that may simplify development and deployment. While their market share remains small, they represent an important third force that helps increase competition.

A modern server or workstation is always a balanced system. CPU choice (AMD EPYC, Intel Xeon, or ARM) directly impacts overall system requirements: the number and type of PCIe lanes needed for GPUs; memory bandwidth required to feed those accelerators; and the platform architecture as a whole. The right CPU + accelerator combination determines final efficiency and cost for the target workload.

A balanced architecture for modern compute systems requires not only a powerful processor, but also a deep understanding of how components—from the chipset and memory controllers to interconnects and storage subsystems—interact. This integration determines whether a system can realize the theoretical potential of its CPU and accelerators in real-world data-center operation.

CPU comparison: AMD EPYC vs. Intel Xeon

The fundamental choice between the two dominant x86 platforms remains central when designing most infrastructures. A direct comparison of AMD EPYC and Intel Xeon reveals not only differences in technical specifications, but also different approaches to solving modern compute tasks.

Performance and multithreading

AMD EPYC 9754

The flagship AMD EPYC 9754 based on the Zen 4c architecture embodies AMD’s strategy for maximum core density. With 128 physical cores and 256 processing threads, it sets a new standard for parallel workloads.

In a dual-socket configuration, the system delivers 256 cores and 512 threads, outperforming comparable dual-socket Intel Xeon builds by roughly 30% in multithreaded tests. This leap is enabled by the chiplet architecture, which allows core counts to scale cost-effectively. This makes the EPYC 9754 and similar models an ideal choice for high-density virtualization, where a single physical host can serve hundreds of virtual machines or thousands of containers; for scalable databases distributing queries across many threads; and for HPC workloads that parallelize efficiently.

Intel Xeon Platinum (4-socket)

Intel’s response to the multithreading challenge is not only more cores per socket, but also support for higher socket counts. A four-socket Intel Xeon Platinum platform provides an extreme amount of aggregated cache and a shared address space, which is critical for very large in-memory database deployments such as SAP HANA, or complex ERP systems.

In this configuration, a system can aggregate 240+ cores. However, performance gains are not linear when adding sockets, because a growing share of memory accesses must cross inter-CPU links to a remote socket, at higher latency (NUMA, non-uniform memory access). Therefore, choosing a 4-socket Xeon platform is justified only for a narrow range of applications specifically optimized for such architectures and requiring an extreme unified memory capacity beyond what dual-socket systems can offer.
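The non-linear scaling can be illustrated with a deliberately simplified model: if memory is interleaved evenly across sockets, a thread finds only 1/N of its data on its local node, so average access latency climbs toward the remote-access latency as sockets are added. The latency values below are hypothetical placeholders, not measurements of any specific Xeon platform, and the model assumes every remote hop costs the same.

```python
def average_memory_latency_ns(local_ns: float, remote_ns: float, sockets: int) -> float:
    """Average latency when memory is spread evenly across `sockets` NUMA nodes.

    Simplified model (assumption): each access targets a uniformly random node,
    so the local-hit fraction is 1/sockets and every remote hop costs remote_ns.
    """
    if sockets < 1:
        raise ValueError("sockets must be >= 1")
    local_fraction = 1 / sockets
    return local_fraction * local_ns + (1 - local_fraction) * remote_ns


# Hypothetical latencies: 100 ns local, 200 ns remote
for n in (1, 2, 4):
    print(n, average_memory_latency_ns(100, 200, n))
```

With these inputs the average rises from 100 ns (one socket) to 150 ns (two) and 175 ns (four). NUMA-aware software avoids this by keeping each thread’s data on its local node, which is why 4-socket systems pay off mainly for applications optimized for them.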

Architecture and scaling capabilities

Memory bandwidth

One of the key architectural differences is memory subsystem design. Latest-generation AMD EPYC processors feature 12 DDR5 memory channels per socket, while 4th- and 5th-generation Intel Xeon Scalable CPUs offer 8 (Intel’s top Xeon 6900P models also move to 12). In practice, at the same memory speed, an EPYC-based server can provide up to 50% higher theoretical peak memory bandwidth than an 8-channel Xeon. For memory-intensive applications, whether DBMS platforms, analytics stacks, in-memory caches (Redis, Memcached), or scientific simulations, this parameter directly affects response time and overall system performance. A wider memory bus also helps “feed” compute cores more efficiently, minimizing idle time while waiting on data.
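The 50% figure follows directly from the channel count when both platforms run DIMMs at the same speed. A minimal sketch of the arithmetic, assuming DDR5-4800 on both sides; actual supported speeds differ by CPU generation and by how many DIMMs are installed per channel.

```python
def peak_bandwidth_gbs(channels: int, mt_per_s: int, bytes_per_transfer: int = 8) -> float:
    """Theoretical peak memory bandwidth per socket in GB/s (decimal units).

    A DDR5 channel moves 64 data bits (8 bytes) per transfer, split across
    two 32-bit subchannels; ECC bits carry no payload and are not counted.
    """
    # MT/s x bytes per transfer = MB/s; divide by 1000 for GB/s
    return channels * mt_per_s * bytes_per_transfer / 1000


epyc = peak_bandwidth_gbs(channels=12, mt_per_s=4800)  # assumed DDR5-4800
xeon = peak_bandwidth_gbs(channels=8, mt_per_s=4800)   # assumed DDR5-4800
print(epyc, xeon, epyc / xeon)
```

This yields 460.8 GB/s versus 307.2 GB/s per socket, a ratio of 1.5. Sustained bandwidth in real benchmarks is lower than these theoretical peaks on both platforms.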

Data protection: Infinity Guard vs SGX/TDX

Hardware-level security has become a mandatory requirement for multi-tenant cloud environments and virtualization. AMD implements its security suite under the Infinity Guard brand, with Secure Encrypted Virtualization (SEV) as a key element. SEV transparently encrypts each virtual machine’s memory using a unique key, isolating one VM’s data from another and even from the hypervisor. Intel offers a functionally similar but differently implemented technology, Trust Domain Extensions (TDX), which likewise isolates entire virtual machines (“trust domains”) from the hypervisor. TDX is separate from Intel’s older Software Guard Extensions (SGX), which protect individual application enclaves rather than whole VMs. Both SEV and TDX aim to create confidential computing environments, but their maturity, performance, and OS/hypervisor support can differ, requiring additional validation when choosing a platform for a specific project.

PCIe lanes and CXL support

Expansion and peripheral connectivity are determined by the number and generation of PCI Express lanes. Here, AMD EPYC traditionally leads by offering 128 lanes of PCIe 5.0 per socket (in dual-socket systems, some of these lanes carry the inter-socket link, leaving 128 to 160 usable lanes in total). Intel Xeon 6 (Granite Rapids) narrows the gap with up to 96 PCIe 5.0 lanes per socket, along with support for the Compute Express Link (CXL) interface. CXL is a rapidly evolving technology that uses the PCIe physical layer to build a coherent shared memory space across CPU, memory, and accelerators.

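Intel promoted CXL early, starting with CXL 1.1 on Sapphire Rapids and moving to CXL 2.0 on Xeon 6, which can be an advantage for future composable infrastructures; AMD EPYC also supports CXL from the 9004 series onward, so device and firmware maturity should be checked per platform. For today’s workloads involving many NVMe drives, high-speed NICs (100/200 GbE), or multiple GPUs, AMD’s abundance of PCIe 5.0 lanes provides more room to scale.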
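Whether a build fits within a platform’s lane budget comes down to simple addition. A minimal sketch with a hypothetical device list; it assumes every device runs at its full link width and ignores lanes consumed by onboard controllers, PCIe switches, or bifurcation limits on a real motherboard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    name: str
    lanes: int   # PCIe link width, e.g. 16 for a GPU, 4 for an NVMe SSD
    count: int


def lanes_required(devices: list[Device]) -> int:
    """Total PCIe lanes needed if every device gets its full link width."""
    return sum(d.lanes * d.count for d in devices)


def fits(devices: list[Device], available_lanes: int) -> bool:
    """True if the devices fit within the platform's usable lane count."""
    return lanes_required(devices) <= available_lanes


# Hypothetical build: 4 GPUs, 8 NVMe SSDs, 2 high-speed NICs at x16
build = [
    Device("GPU", 16, 4),
    Device("NVMe SSD", 4, 8),
    Device("200GbE NIC", 16, 2),
]
print(lanes_required(build))            # 64 + 32 + 32 = 128
print(fits(build, 128), fits(build, 96))
```

This hypothetical build consumes exactly 128 lanes: it fits a 128-lane budget but not a 96-lane one without dropping devices, narrowing links, or adding a PCIe switch.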

The central takeaway from this platform comparison is that AMD EPYC more often proves to be the better choice for workloads prioritizing maximum compute density, high memory bandwidth, and efficient scaling within a node. Intel Xeon, in turn, retains strong positions where maximum single-thread frequency is required, where integrated hardware accelerators (QAT, AMX) matter, or where vertical scaling via multi-socket configurations is needed for legacy enterprise applications. The right choice is impossible without a clear understanding of the target workload’s characteristics.

Server platforms

The platform is an ecosystem that defines a server system’s capabilities and constraints beyond the processor itself. It includes the chipset, socket type, support for different memory types, and I/O interfaces. In 2025, the dominant x86 platforms are AMD’s Socket SP5 and Intel’s LGA 4677 (Socket E) for 4th- and 5th-generation Xeon Scalable, followed by LGA 4710 and LGA 7529 for Xeon 6. AMD’s SP5 platform, introduced alongside the EPYC 9004 series, supports multiple CPU generations (EPYC 9004 and 9005), giving buyers a path to future upgrades without replacing the motherboard.

It supports all key modern technologies: 12 DDR5 channels, 128 PCIe 5.0 lanes, and the Infinity Fabric interconnect for dual-CPU configurations. Intel’s platforms for 4th/5th-generation Xeon Scalable and Xeon 6 have also moved to DDR5 and PCIe 5.0, with a focus on accelerator integration and CXL support for composable systems. Platform choice is a long-term decision that defines an infrastructure roadmap for the next 3–5 years.

Memory

The evolution of the memory subsystem is one of the main drivers of performance growth in modern systems.

DDR5

By 2025, DDR5 has become ubiquitous for new server deployments. Compared to DDR4, it offers not only higher transfer rates (from 4800 MT/s and up) but also a different architecture: each module is split into two independent subchannels, each 32 data bits wide (40 bits on server ECC modules: 32 data bits plus 8 ECC bits), which lets the memory controller handle more concurrent accesses and raises effective bandwidth. DDR5 also adds on-die ECC inside each DRAM chip; it corrects errors within the chip but does not replace the side-band ECC of server RDIMMs, which protects data on its way to the CPU and remains critical for data integrity in a data center. The transition to DDR5 on both platforms (AMD and Intel) is effectively mandatory, increasing upfront costs but establishing a foundation for performance.
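The subchannel split works because DDR5 also doubled the burst length: a 32-bit subchannel with burst length 16 still delivers one 64-byte cache line per access, the same as a 64-bit DDR4 channel with burst length 8, but a DIMM can now serve two such accesses independently. The arithmetic, as a small sketch (the helper name is illustrative):

```python
def bytes_per_burst(data_bits: int, burst_length: int) -> int:
    """Data bytes delivered by one burst on a channel (ECC bits excluded)."""
    return data_bits * burst_length // 8


# DDR4: one 64-bit channel per DIMM, burst length 8
DDR4_CHANNEL = bytes_per_burst(data_bits=64, burst_length=8)
# DDR5: two independent 32-bit subchannels per DIMM, burst length 16
DDR5_SUBCHANNEL = bytes_per_burst(data_bits=32, burst_length=16)

print(DDR4_CHANNEL, DDR5_SUBCHANNEL)  # both equal a 64-byte cache line
```

Both values come out to 64 bytes, so DDR5 keeps the cache-line granularity the CPU expects while doubling the number of independent accesses in flight per DIMM.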

DDR6

While DDR6 is in active development, its specification is still being finalized, and mass adoption in server systems is generally not expected before around 2027. It is expected to deliver another significant leap in bandwidth and energy efficiency. Those planning major infrastructure investments should consider this technology cycle when evaluating deployment timelines and hardware lifecycles.

CXL

Compute Express Link (CXL) is not a new type of memory, but an interconnect protocol built on the PCIe 5.0/6.0 physical layer. It allows the CPU to treat memory attached to other devices (accelerators or dedicated CXL memory-expansion modules) as part of a single cache-coherent address space; access latency is higher than for local DRAM, roughly comparable to reaching memory on a remote socket.

This opens the door to “shared memory pools” in the data center that can be dynamically allocated across servers and workloads, potentially improving resource utilization significantly. In 2025, CXL 1.1 and 2.0 are being actively adopted on both Intel Xeon (since Sapphire Rapids) and AMD EPYC (since the 9004 series) platforms.

Drives

Storage access speed often becomes a bottleneck. NVMe over PCIe 5.0, supported by modern server platforms, doubles per-lane bandwidth compared to PCIe 4.0: a single PCIe 5.0 x4 SSD can reach sequential reads of roughly 14 GB/s, and arrays of such drives reach tens of gigabytes per second.
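The doubling comes from the signaling rate: PCIe 4.0 runs at 16 GT/s per lane and PCIe 5.0 at 32 GT/s, both with 128b/130b line encoding. A minimal sketch of the raw link bandwidth per direction; it deliberately ignores packet and protocol overhead, so real drives deliver somewhat less.

```python
# Per-lane signaling rate in GT/s; PCIe 3.0 through 5.0 all use 128b/130b encoding
TRANSFER_RATE_GTS = {3: 8, 4: 16, 5: 32}


def link_bandwidth_gbs(gen: int, lanes: int) -> float:
    """Raw one-direction link bandwidth in GB/s after 128b/130b line encoding.

    Packet and protocol overhead are ignored, so real devices deliver less.
    """
    gts = TRANSFER_RATE_GTS[gen]
    return gts * (128 / 130) * lanes / 8  # bits -> bytes


for gen in (4, 5):
    print(f"PCIe {gen}.0 x4: {link_bandwidth_gbs(gen, 4):.2f} GB/s")
```

This prints about 7.88 GB/s for a PCIe 4.0 x4 link and 15.75 GB/s for PCIe 5.0 x4, which is why the fastest Gen5 SSDs top out somewhat below 16 GB/s.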

For maximum performance and fault tolerance, RAID configurations of NVMe drives are used, requiring the CPU and platform to provide enough high-speed PCIe lanes. The evolution of storage standards is directly tied to the evolution of server processors and their I/O capabilities.

Practical use cases

Theoretical comparisons only matter when applied to concrete business tasks. Below is an analysis of the optimal CPU choice for typical scenarios.

High-load web servers

For web services, API backends, and content delivery networks (CDN), the key factors are the ability to handle tens of thousands of concurrent connections and low latency. Here, CPUs with a high number of cores and threads have an advantage, because requests can be spread across many worker processes or threads running in parallel.

AMD EPYC, with its high core density and wide memory bus, can handle more requests per second on a single server. ARM processors such as Ampere Altra are also an excellent fit thanks to their energy efficiency and predictable per-core performance, which can translate into significant savings at the scale of a large data center.
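A back-of-the-envelope way to translate request load into cores is the utilization law: busy cores equal the request rate multiplied by the CPU time each request consumes, and dividing by a target utilization leaves headroom for bursts. A minimal sketch, assuming a CPU-bound service whose per-request CPU time has been measured; all input values are hypothetical.

```python
import math


def cores_needed(requests_per_s: float, cpu_ms_per_request: float,
                 target_utilization: float = 0.7) -> int:
    """Estimate cores for a CPU-bound service.

    Busy cores = arrival rate x CPU seconds per request (utilization law);
    dividing by target_utilization keeps headroom for traffic bursts.
    """
    if not 0 < target_utilization <= 1:
        raise ValueError("target_utilization must be in (0, 1]")
    busy_cores = requests_per_s * cpu_ms_per_request / 1000
    return math.ceil(busy_cores / target_utilization)


# Hypothetical: 50,000 req/s at 2 ms of CPU time each, 70% target utilization
print(cores_needed(50_000, 2.0))
```

Here 100 cores would be fully busy, so about 143 cores are needed at 70% utilization. SMT threads do not double throughput, so comparing the result against physical core counts is the more conservative choice.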

Virtualization and containerization

Building dense virtual environments is territory where AMD EPYC dominates. The number of VMs or containers you can place on a physical host is bounded by the available CPU threads and memory capacity, whichever runs out first. In CPU-bound scenarios, a 96- or 128-core EPYC can host 50%+ more virtual machines than a 60-core Xeon in a comparable server, directly reducing hardware costs, hypervisor licensing (if licensed per socket rather than per core), and rack space. Hardware VM encryption technologies (SEV/TDX) add an additional security layer for multi-tenant environments.
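In practice, host density is capped by whichever resource runs out first, so core count alone does not determine it. A minimal sketch, assuming identical VMs, no memory overcommit, and a hypothetical 32 GiB reserved for the hypervisor; the function and every parameter value are illustrative.

```python
def max_vms(physical_cores: int, smt: int, host_mem_gib: int,
            vcpus_per_vm: int, mem_gib_per_vm: int,
            vcpu_overcommit: float = 1.0, host_reserved_gib: int = 32) -> int:
    """VMs per host, limited by whichever runs out first: vCPUs or RAM.

    Assumes identical VMs and no memory overcommit; all values are illustrative.
    """
    vcpu_capacity = physical_cores * smt * vcpu_overcommit
    by_cpu = int(vcpu_capacity // vcpus_per_vm)
    by_mem = (host_mem_gib - host_reserved_gib) // mem_gib_per_vm
    return max(0, min(by_cpu, by_mem))


# Hypothetical hosts with 1,536 GiB RAM running 4-vCPU / 16 GiB VMs
print(max_vms(256, 2, 1536, 4, 16))  # 2 x 128-core host
print(max_vms(120, 2, 1536, 4, 16))  # 2 x 60-core host
```

With these inputs the 256-core host has vCPUs for 128 VMs but memory for only 94, while the 120-core host is CPU-bound at 60 VMs. Adding RAM, not cores, would raise the first host’s density.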

Databases and analytics

The right choice for DBMS workloads depends on the workload type. For transactional (OLTP) workloads on systems such as PostgreSQL or MySQL, which scale well across many parallel connections, multithreaded AMD EPYC with its high memory-channel count wins again, enabling faster access to indexes and the buffer pool. For large analytical (OLAP) warehouses and in-memory DBMS solutions (for example, SAP HANA), overall memory bandwidth and capacity are critical. Here, both high-channel EPYC and multi-socket Xeon configurations may be relevant to reach maximum unified memory capacity.

Network services and encryption

For workloads such as software-defined networking (SDN), virtual routers (vRouter), firewalls, and VPN gateways, not only CPU performance matters, but also the presence of specialized hardware accelerators. Intel Xeon with integrated Intel QuickAssist Technology (QAT) provides significant acceleration for encryption/decryption and data compression, offloading CPU cores and increasing total throughput; the number of enabled QAT engines varies by Xeon SKU, so it should be checked for the specific model. For these purposes, Xeon can be preferable even if it trails in overall core count.

Conclusion

By 2025, the server CPU market has reached a new level of maturity and diversity, where there is no longer a single “best” solution for everyone. AMD EPYC has established itself as the leader in multithreading, memory bandwidth, and energy efficiency—offering maximum compute density for modern cloud and virtualized environments.

Intel Xeon retains strong positions thanks to a mature ecosystem, high single-thread performance, and a unique set of integrated accelerators for AI, encryption, and networking. In parallel, the ARM architecture—represented by players such as Ampere, NVIDIA, and AWS—forms a strong alternative in the niche of energy-efficient, scalable cloud and web workloads, promising meaningful total cost of ownership savings.

The key trend for the near future is further specialization. CPU choice will increasingly be determined not by abstract benchmark scores, but by a precise match to specific workload characteristics: memory access patterns, degree of parallelism, latency and security requirements, and deployment economics (TCO).

The adoption of technologies like CXL and tighter integration of CPU with GPU and other accelerators will blur the boundaries between components, turning the server into a unified, highly optimized compute platform. For IT architects and decision-makers, it is more important than ever to run deep workload analysis and build flexible, adaptable infrastructure capable of leveraging the strengths of each available architecture.

Frequently Asked Questions (FAQ)

- Question: What matters more when choosing a server processor—core count or clock speed?

- Answer: It depends on the workload. For parallelizable workloads (virtualization, rendering, web servers), core and thread count is critical. For legacy or poorly parallelized applications (some monolithic ERPs, older databases), high single-core frequency is more important. In most modern server scenarios, the former dominates.

- Question: Is it true that ARM processors are much more energy efficient than x86, and should they be considered for a data center?

- Answer: Yes—ARM CPUs can deliver up to ~40% better performance per watt in suitable workloads (web services, containers, caches). However, adoption requires validating the entire software stack for compatibility, as not all applications have native ARM builds. It’s a great choice for horizontally scalable, cloud-native environments, but can be challenging for legacy software stacks.

- Question: How does CPU choice affect software licensing costs (for example, VMware, Microsoft SQL Server)?

- Answer: Critically. Many enterprise products are licensed by the number of physical or virtual cores. As a result, deploying a server with 128 AMD EPYC cores can lead to huge licensing costs compared to a 64-core Intel Xeon server—even if the former is more powerful. Before buying hardware, be sure to analyze the licensing model of your target software.

- Question: Can desktop processors (Intel Core / AMD Ryzen) be used in servers?

- Answer: Technically—yes, for test benches, home labs, or non-critical tasks. For production environments, it’s strongly discouraged. Server CPUs (Xeon, EPYC) provide required features: ECC memory support for error correction, more PCIe lanes for expansion, advanced reliability features (RAS), and a long support lifecycle. Lacking these in desktop chips threatens stability and data integrity.

- Question: What is CXL and why is it important for the future?

- Answer: Compute Express Link (CXL) is an open high-speed interconnect standard that allows CPUs, memory, and accelerators to share resources efficiently with low latency. In the future, CXL will enable “composable” servers where memory and acceleration resources are dynamically allocated from shared pools, dramatically improving data-center flexibility and utilization. It’s one of the key emerging technologies.
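The licensing question above can be reduced to a short calculation. A sketch under a generic per-core model: the per-socket minimum and pack size are hypothetical parameters modelled on common vendor practice, not any specific vendor’s current terms, and the price is a placeholder, so check the actual license agreement before relying on numbers like these.

```python
import math


def per_core_license_cost(cores_per_socket: int, sockets: int,
                          price_per_core: float,
                          min_cores_per_socket: int = 0,
                          pack_size: int = 1) -> float:
    """Cost under a generic per-core licensing model (illustrative only).

    Each socket is licensed for max(actual cores, per-socket minimum),
    rounded up to whole packs of `pack_size` core licenses.
    """
    per_socket = max(cores_per_socket, min_cores_per_socket)
    packs = math.ceil(per_socket / pack_size) * sockets
    return packs * pack_size * price_per_core


# Hypothetical price of 1,000 per core license
epyc_128 = per_core_license_cost(cores_per_socket=64, sockets=2, price_per_core=1000.0)
xeon_64 = per_core_license_cost(cores_per_socket=32, sockets=2, price_per_core=1000.0)
print(epyc_128, xeon_64)  # 128000.0 64000.0
```

Under per-core licensing, cost scales linearly with licensed cores, so the 128-core server costs twice as much to license; per-socket minimums can also make small CPUs more expensive per core than they appear.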

Additional resources and publications

For deeper study, consider the following types of resources:

- Official vendor websites: AMD EPYC, Intel Xeon — for accurate specifications, official white papers, and roadmap statements.

- Independent benchmarking platforms: SPEC, with performance and power-consumption test results for comparing processors under standardized conditions.

- Technical analytics media and communities: resources like Habr, Tom's Hardware, ServeTheHome, and the AnandTech archive (the site stopped publishing in 2024) offer in-depth technical reviews and architectural analysis.

- Forums and communities of system administrators and engineers: practical experience deploying and operating different platforms in real-world environments, discussion of issues and solutions.